https://www.tecmint.com/rsync-files-between-linux-and-windows

How to Use Rsync to Sync Files Between Linux and Windows Using WSL

Synchronizing files between Linux and Windows can seem challenging, especially if you’re not familiar with the tools available. However, with the Windows Subsystem for Linux (WSL), this process becomes much simpler.

WSL allows you to run a Linux environment directly on Windows, enabling you to use powerful Linux tools like Rsync to sync files between the two operating systems.

In this article, we’ll walk you through the entire process of using Rsync to sync files between Linux and Windows using WSL. We’ll cover everything from setting up WSL to writing scripts for automated syncing.

By the end, you’ll have a clear understanding of how to efficiently manage file synchronization across these two platforms.

What is Rsync?

Rsync (short for “remote synchronization”) is a command-line tool for synchronizing files and directories between two locations. It is efficient because it skips files that haven’t changed since the last run and, when syncing over a network, sends only the changed parts of modified files instead of copying everything every time. That makes it well suited to syncing large files or large numbers of files.
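The “skip what hasn’t changed” behavior can be illustrated with a small Bash sketch. By default, rsync treats a file as up to date when its size and modification time match on both sides (the -c/--checksum option switches to comparing checksums instead). The hypothetical needs_update function below mirrors only that decision rule; it is not rsync’s implementation and ignores details such as --modify-window.

```shell
#!/usr/bin/env bash
# Simplified illustration of rsync's default "quick check":
# a destination file counts as up to date when its size and modification
# time match the source. Not rsync's actual code. Requires GNU stat.

# Returns 0 (true) if dst is missing or differs from src in size or mtime.
needs_update() {
  local src="$1" dst="$2"
  [[ -e "$dst" ]] || return 0
  local src_meta dst_meta
  # %s = size in bytes, %Y = last modification time in seconds since the epoch
  src_meta="$(stat -c '%s %Y' -- "$src")"
  dst_meta="$(stat -c '%s %Y' -- "$dst")"
  [[ "$src_meta" != "$dst_meta" ]]
}
```

For example, needs_update ~/notes.txt /mnt/c/Backup/notes.txt && echo "would copy" reports whether the file would be transferred under this rule.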

Why Use Rsync with WSL?

- WSL allows you to run Linux commands and tools directly on Windows, making it easier to use Rsync.

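- Rsync skips files that haven’t changed and, over a network, transfers only the changed parts of modified files, saving time and bandwidth.

- You can sync files between your WSL environment and the Windows file system, or with a remote Linux machine, using the same tool.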

- Rsync can be automated using scripts, making it perfect for regular backups or syncing tasks.

Prerequisites

Before we begin, ensure you have the following:

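- Windows 10 version 2004 (Build 19041) or later, or Windows 11; this is what the wsl --install command used below requires.

- WSL installed and set up on your Windows machine (Step 1 covers this).

- A Linux distribution such as Ubuntu, installed from the Microsoft Store or by wsl --install.

- Rsync, which is usually pre-installed on Linux distributions; Step 2 shows how to install it if it’s missing.

- SSH, only if you plan to sync with another machine over the network. Rsync then uses SSH as a secure transport, and the remote machine needs Rsync installed as well.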
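Before continuing, a quick check can confirm which of these tools are already available. This is a minimal sketch: check_prereqs and is_wsl are hypothetical helper names, and is_wsl relies on the common heuristic that the WSL kernel’s /proc/version string contains “microsoft”.

```shell
#!/usr/bin/env bash
# Print each missing command on its own line and return how many are missing
# (0 means everything needed is installed).
check_prereqs() {
  local cmd missing=0
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "missing: $cmd"
      missing=$((missing + 1))
    fi
  done
  return "$missing"
}

# Heuristic: the WSL kernel's version string contains "microsoft" (or "Microsoft").
is_wsl() {
  grep -qi microsoft /proc/version 2>/dev/null
}
```

For example, check_prereqs rsync ssh prints any missing commands, and is_wsl && echo "inside WSL" confirms where you are running.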

Step 1: Install and Set Up WSL

If you haven’t installed WSL yet, open PowerShell as administrator: press Win + X and select “Windows PowerShell (Admin)” or “Command Prompt (Admin)” (on Windows 11, “Terminal (Admin)”). Then run the following command to install WSL.

wsl --install

This command installs WSL and the default Linux distribution (usually Ubuntu). After installation, restart your computer to complete the setup.

Once your computer restarts, open the installed Linux distribution (e.g., Ubuntu) from the Start menu. Follow the on-screen instructions to create a user account and set a password.

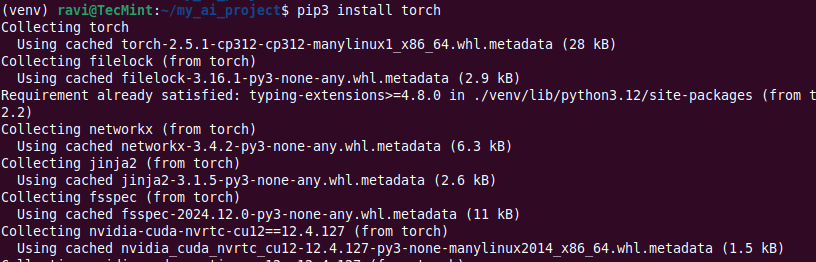

Step 2: Install Rsync on WSL

Rsync is pre-installed on most Linux distributions. If it’s missing, install it with the following commands, then check the installed version:

sudo apt update
sudo apt install rsync
rsync --version

This should display the installed version of Rsync.
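If you set up more than one machine, the check-then-install step can be wrapped in a function so that apt only runs when a command is actually missing. This is a sketch assuming a Debian- or Ubuntu-based distribution with apt and sudo; ensure_cmd is a hypothetical name.

```shell
#!/usr/bin/env bash
# Install a package with apt only if the given command is not already on PATH.
# Assumes a Debian/Ubuntu-based distribution with apt and sudo.
# Usage: ensure_cmd COMMAND [PACKAGE]   (PACKAGE defaults to COMMAND)
ensure_cmd() {
  local cmd="$1" pkg="${2:-$1}"
  if command -v "$cmd" >/dev/null 2>&1; then
    echo "$cmd already installed"
    return 0
  fi
  sudo apt update && sudo apt install -y "$pkg"
}
```

ensure_cmd rsync installs Rsync only if needed; the same helper covers the SSH server with ensure_cmd sshd openssh-server, since the server binary is called sshd.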

Step 3: Set Up SSH on WSL

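SSH is only needed for syncing with another machine over the network; the local syncing in Steps 4 and 5 goes through /mnt/ and does not use it. To accept SSH connections in WSL, install the OpenSSH server: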

sudo apt install openssh-server

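Next, start the SSH service, and enable it so it starts automatically every time you launch WSL. Note that systemctl enable only works when systemd is enabled in your distribution (systemd=true under [boot] in /etc/wsl.conf); recent Ubuntu installs created with wsl --install have it turned on by default.

sudo service ssh start
sudo systemctl enable ssh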

Verify that the SSH service is running.

sudo service ssh status
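With an SSH server on the other end, the same rsync command can push files to a remote machine by prefixing the destination with user@host:, and the -e option selects the remote shell (useful for a non-default SSH port). The sketch below assumes the remote host accepts SSH logins and has Rsync installed; push_over_ssh, the DRY_RUN switch, and all hosts and paths are hypothetical placeholders.

```shell
#!/usr/bin/env bash
# Run a command, or just print it when DRY_RUN=1 (handy for checking arguments).
run() {
  if [[ "${DRY_RUN:-0}" == 1 ]]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Push SRC to DEST on a remote machine over SSH.
# Usage: push_over_ssh SRC USER@HOST DEST [PORT]   (PORT defaults to 22)
push_over_ssh() {
  local src="$1" remote="$2" dest="$3" port="${4:-22}"
  # -e sets the remote shell rsync uses; this is where a custom SSH port goes.
  run rsync -avz -e "ssh -p $port" "$src" "${remote}:${dest}"
}
```

For example, DRY_RUN=1 push_over_ssh ~/docs/ alice@192.168.1.50 /srv/backup/docs/ 2222 prints the command without running it. To pull instead, swap the arguments so the user@host: path comes first. Reaching the SSH server inside WSL from another computer usually needs extra networking setup on the Windows side, because WSL 2 runs behind NAT by default.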

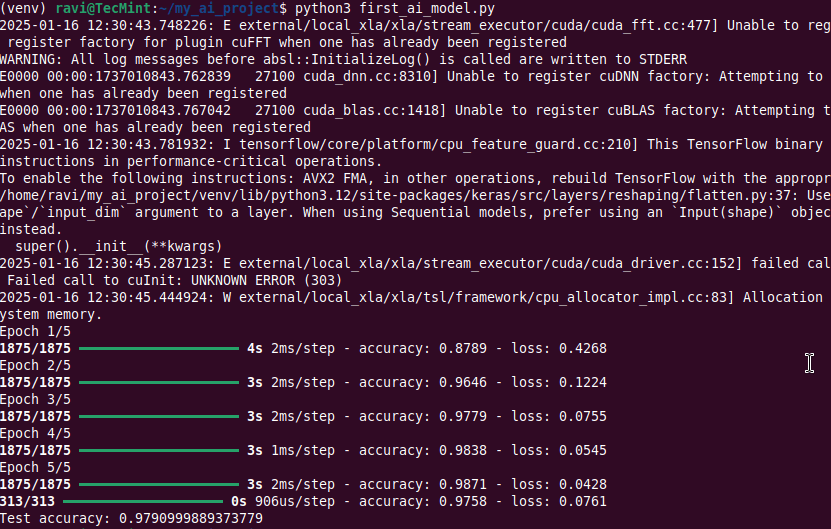

Step 4: Sync Files from Linux (WSL) to Windows

Now that Rsync and SSH are set up, you can start syncing files. Let’s say you want to sync files from your WSL environment to a directory on your Windows machine.

Launch your Linux distribution (e.g., Ubuntu) and locate the Windows directory you want to use. Windows drives are mounted under /mnt/; for example, your C: drive is available at /mnt/c/.
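If you start from a path copied out of File Explorer, WSL’s wslpath utility can convert it for you (for example, wslpath -u 'C:\Users\alice'). The sketch below wraps that in a hypothetical win_to_wsl function with a plain-Bash fallback for illustration; the fallback assumes the default /mnt/ mount root, which can be changed with the root setting under [automount] in /etc/wsl.conf.

```shell
#!/usr/bin/env bash
# Convert a Windows path such as 'C:\Users\alice\Documents' to its WSL path.
# Prefers WSL's own wslpath tool; the fallback assumes the default /mnt root.
win_to_wsl() {
  local p="$1"
  if command -v wslpath >/dev/null 2>&1; then
    wslpath -u "$p"
    return
  fi
  # Fallback: accept only drive-letter paths like C:\... or C:/...
  [[ "$p" =~ ^[A-Za-z]: ]] || { echo "not a drive path: $p" >&2; return 1; }
  local drive="${p:0:1}" rest="${p:2}"
  rest="${rest//\\//}"                  # backslashes -> forward slashes
  printf '/mnt/%s%s\n' "${drive,,}" "$rest"   # drive letter in lowercase
}
```

Quote the result, since Windows paths often contain spaces: rsync -av ~/reports/ "$(win_to_wsl 'C:\Users\alice\My Reports')/".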

Now run the following command to sync files from your WSL directory to a Windows directory:

rsync -avz /path/to/source/ /mnt/c/path/to/destination/

Explanation of the command:

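- -a: Archive mode (recurses into directories and preserves symbolic links, permissions, and timestamps, to the extent the destination file system supports them).
- -v: Verbose mode (provides detailed output).
- -z: Compresses data during transfer; this only helps when data travels over a network and can be left out for local copies like this one.
- /path/to/source/: The directory in your WSL environment that you want to sync. The trailing slash copies the directory’s contents; without it, Rsync creates a source directory inside the destination.
- /mnt/c/path/to/destination/: The directory on your Windows machine where you want to sync the files.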
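Because a mistyped destination can overwrite files on the Windows side, it can help to preview a sync first. Rsync’s --dry-run (-n) option reports what would be transferred without copying anything, and --itemize-changes (-i) shows why each file would change. The hypothetical sync_dir function below previews by default and only copies when given --apply; it also normalizes the source to end in a slash so the directory’s contents, not the directory itself, land in the destination.

```shell
#!/usr/bin/env bash
# Preview (default) or perform a sync from SRC into DEST.
# Usage: sync_dir SRC DEST [--apply]
sync_dir() {
  # Force a trailing slash so the contents of SRC are copied into DEST.
  local src="${1%/}/" dest="$2" mode="${3:-}"
  local -a opts=(-av --itemize-changes)
  # Without --apply, only report what would change.
  [[ "$mode" == --apply ]] || opts+=(--dry-run)
  rsync "${opts[@]}" "$src" "$dest"
}
```

For example, run sync_dir ~/projects /mnt/c/Users/alice/Backup/, review the list, then repeat the call with --apply as a third argument.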

Step 5: Sync Files from Windows to Linux (WSL)

If you want to sync files from a Windows directory to your WSL environment, you can use a similar command:

rsync -avz /mnt/c/path/to/source/ /path/to/destination/

Explanation of the command:

- /mnt/c/path/to/source/: The directory on your Windows machine that you want to sync.
- /path/to/destination/: The directory in your WSL environment where you want to sync the files.
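Files under /mnt/c often appear with very open permissions (typically rwxrwxrwx) unless the drive is mounted with the metadata option, and -a copies those modes into your Linux directories. One option, assuming you don’t need to keep the Windows-side mode bits, is to let --chmod assign conventional modes and to skip Windows metadata files such as Thumbs.db and desktop.ini. pull_from_windows is a hypothetical name; adjust the modes and exclusions to your needs (F644, for instance, drops the execute bit from any scripts).

```shell
#!/usr/bin/env bash
# Copy a Windows folder into WSL with conventional Linux permissions.
# Usage: pull_from_windows SRC DEST
pull_from_windows() {
  local src="${1%/}/" dest="$2"
  # -a includes -p, so --chmod decides the final modes:
  # 755 for directories (D), 644 for regular files (F).
  rsync -av --chmod=D755,F644 \
    --exclude='Thumbs.db' --exclude='desktop.ini' \
    "$src" "$dest"
}
```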

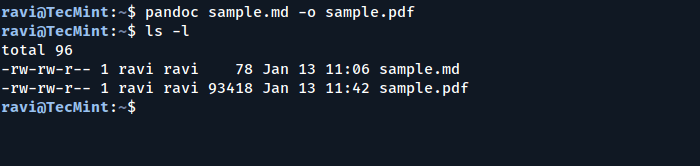

Step 6: Automate Syncing with a Script

To make syncing easier, you can create a bash script to automate the process.

nano sync.sh

Add the following lines to the script:

#!/bin/bash
rsync -avz /path/to/source/ /mnt/c/path/to/destination/

Save the file and make the script executable:

chmod +x sync.sh

Execute the script to sync files.

./sync.sh
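For unattended runs, a slightly more defensive version of sync.sh can stop on errors, log each run with a timestamp, and use flock so a scheduled run never overlaps a manual one. This is a sketch: SRC, DEST, LOG, and LOCK are placeholder defaults you can override from the environment, and do_sync and main are hypothetical function names.

```shell
#!/usr/bin/env bash
# Defensive variant of sync.sh (sketch). All paths are placeholder defaults
# that can be overridden from the environment, e.g. SRC=~/docs/ ./sync.sh
set -euo pipefail

SRC="${SRC:-/path/to/source/}"
DEST="${DEST:-/mnt/c/path/to/destination/}"
LOG="${LOG:-${HOME:-/tmp}/sync.log}"
LOCK="${LOCK:-/tmp/sync.lock}"

# Append a timestamped message to the log file.
log() { printf '%s %s\n' "$(date '+%F %T')" "$*" >> "$LOG"; }

# Run rsync, record the outcome, and return rsync's exit code.
do_sync() {
  local rc=0
  log "start: $SRC -> $DEST"
  rsync -av "$SRC" "$DEST" >> "$LOG" 2>&1 || rc=$?
  if (( rc == 0 )); then
    log "done"
  else
    log "rsync failed (exit $rc)"
  fi
  return "$rc"
}

# Take a non-blocking exclusive lock so overlapping runs (e.g. cron plus a
# manual run) exit quietly instead of syncing twice.
main() {
  exec 9>"$LOCK"
  if ! flock -n 9; then
    log "another sync is already running"
    exit 0
  fi
  do_sync
}

# When saving this as sync.sh, end the file with:  main "$@"
```

Once that final line is in place, run it the same way as before (./sync.sh); failures are recorded in the log together with rsync’s exit code.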

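You can use cron to run the script at specific intervals. In WSL, make sure the cron service is running (sudo service cron start) and keep in mind that jobs only fire while your WSL distribution is running. To run the script every day at 2 AM, open your crontab with crontab -e and add the following line, using the script’s full path: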

0 2 * * * /path/to/sync.sh
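If you prefer to install the schedule from a script rather than editing the crontab by hand, the entry can be added idempotently so that running the setup twice does not create duplicate jobs. A minimal sketch; add_cron_job is a hypothetical helper, and the job line and log path are placeholders. Redirecting output to a log file is optional but makes scheduled failures easier to spot.

```shell
#!/usr/bin/env bash
# Add a line to the current user's crontab only if it is not already there.
add_cron_job() {
  local line="$1"
  # crontab -l fails when no crontab exists yet; "|| true" treats that as empty.
  # grep -vxF drops any exact copy of the line, so re-running never duplicates it,
  # while all other existing entries are passed through unchanged.
  { crontab -l 2>/dev/null | grep -vxF -- "$line" || true; echo "$line"; } | crontab -
}

# Example (path and log location are placeholders):
# add_cron_job '0 2 * * * /path/to/sync.sh >> "$HOME/sync.log" 2>&1'
```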

Conclusion

Using Rsync with WSL is a powerful and efficient way to sync files between Linux and Windows. By following the steps outlined in this article, you can easily set up Rsync, configure SSH, and automate file synchronization.