https://www.tecmint.com/logkeys-monitor-keyboard-keystroke-linux

LogKeys: Monitor Keyboard Keystrokes in Linux

Keylogging, short for “keystroke logging”, is the process of recording the keys struck on a keyboard, usually without the user’s knowledge.

Keyloggers can be implemented via hardware or software:

- Hardware keyloggers intercept data at the physical level (e.g., between the keyboard and computer).

- Software keyloggers, like LogKeys, capture keystrokes through the operating system.

This article explains how to use a popular open-source Linux keylogger called LogKeys for educational or testing purposes only. Unauthorized use of keyloggers to monitor someone else’s activity is unethical and illegal.

What is LogKeys?

LogKeys is an open-source keylogger for Linux that captures and logs keyboard input, including characters, function keys, and special keys. It is designed to work reliably across a wide range of Linux systems without crashing the X server.

LogKeys also correctly handles modifier keys like Alt and Shift, and is compatible with both USB and serial keyboards.

While there are numerous keylogger tools available for Windows, Linux has fewer well-supported options. Although LogKeys has not been actively maintained since 2019, it remains one of the more stable and functional keyloggers available for Linux.

Installation of LogKeys in Linux

If you’ve previously installed Linux packages from a tarball (source), you should find installing the LogKeys package straightforward.

However, if you’ve never built a package from source before, you’ll first need to install the required development tools, such as a C++ compiler, make, and the GNU Autotools.

Installing Prerequisites

Before building LogKeys from source, ensure your system has the required development tools and libraries installed:

On Debian/Ubuntu:

sudo apt update

sudo apt install build-essential autotools-dev autoconf kbd

On Fedora/CentOS/RHEL:

sudo dnf install automake make gcc-c++ kbd

On openSUSE:

sudo zypper install automake gcc-c++ kbd

On Arch Linux:

sudo pacman -S base-devel kbd

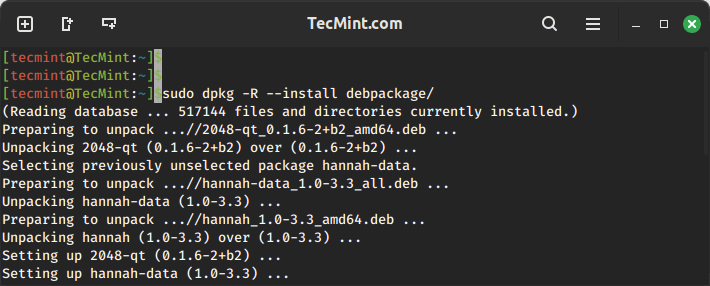

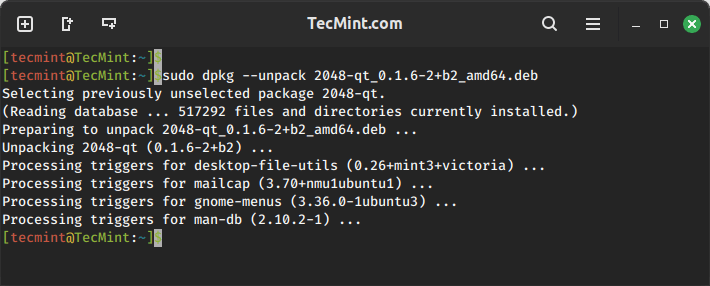
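The per-distribution commands above can be folded into one small lookup. The following is a minimal sketch, assuming the distribution is identified by the `ID` field of /etc/os-release; the function name `prereq_cmd_for` is hypothetical, and it only prints the matching command rather than running it.

```shell
#!/bin/sh
# prereq_cmd_for is a hypothetical helper, not part of LogKeys.
# It maps a distribution ID to the prerequisite command listed above.
prereq_cmd_for() {
    case "$1" in
        debian|ubuntu)
            echo "sudo apt install build-essential autotools-dev autoconf kbd" ;;
        fedora|centos|rhel)
            echo "sudo dnf install automake make gcc-c++ kbd" ;;
        opensuse*)
            echo "sudo zypper install automake gcc-c++ kbd" ;;
        arch)
            echo "sudo pacman -S base-devel kbd" ;;
        *)
            echo "unsupported distribution: $1" >&2
            return 1 ;;
    esac
}

# Assumption: /etc/os-release exists and defines ID.
if [ -r /etc/os-release ]; then
    distro_id="$(. /etc/os-release && echo "$ID")"
    prereq_cmd_for "$distro_id" || true
fi
```

Derivative distributions that report a different `ID` (for example, one based on Ubuntu) are not handled by this sketch; on those, pick the command for the parent distribution by hand.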

Installing LogKeys from Source

First, download the latest LogKeys source package using the wget command. Then extract the ZIP archive and change into the extracted directory:

wget https://github.com/kernc/logkeys/archive/master.zip

unzip master.zip

cd logkeys-master/

or clone the repository using Git, as shown below:

git clone https://github.com/kernc/logkeys.git

cd logkeys

Next, run the following commands to build and install LogKeys:

./autogen.sh # Generate build configuration scripts

cd build # Switch to build directory

../configure # Configure the build

make # Compile the source code

sudo make install # Install binaries and man pages
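If the build stops early, a missing tool is a common cause. The sketch below checks for the build tools before running the steps above. The helper name `missing_tools` is hypothetical, and the tool list is an assumption based on the prerequisite packages rather than something taken from the LogKeys build scripts.

```shell
#!/bin/sh
# missing_tools is a hypothetical helper: it prints each named command
# that cannot be found on PATH, one per line.
missing_tools() {
    for tool in "$@"; do
        command -v "$tool" >/dev/null 2>&1 || echo "$tool"
    done
}

# Assumed tool list, derived from the prerequisite packages.
missing="$(missing_tools autoconf automake make g++)"
if [ -n "$missing" ]; then
    # $missing is unquoted so the names print on a single line.
    echo "Install these before building:" $missing
else
    echo "Build tools found; ready to run ./autogen.sh"
fi
```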

If you encounter issues related to keyboard layout or character encoding, regenerate your locale settings:

sudo locale-gen

Usage of LogKeys in Linux

Once LogKeys is installed, you can monitor and log keyboard input with the following commands.

Start Keylogging

The following command starts the keylogging process. It must be run with superuser (root) privileges because it needs access to low-level input devices. Once started, LogKeys records all keystrokes and saves them to the default log file, /var/log/logkeys.log.

Note: You won’t see any output in the terminal; logging runs silently in the background.

sudo logkeys --start
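Because the logger prints nothing, a quick process check can confirm that it is actually running. This is a minimal sketch, assuming `pgrep` (from the procps package) is available; `logkeys_running` is a hypothetical helper, not a LogKeys command.

```shell
#!/bin/sh
# logkeys_running is a hypothetical helper.
# pgrep -x matches the process name exactly and exits 0 when a match exists.
logkeys_running() {
    pgrep -x "${1:-logkeys}" >/dev/null 2>&1
}

if logkeys_running; then
    echo "logkeys is running"
else
    echo "logkeys is not running"
fi
```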

Stop Keylogging

The following command terminates the keylogging process that was started earlier. Stop LogKeys when you’re done, both to conserve system resources and to ensure the log file is safely closed.

sudo logkeys --kill

Get Help / View Available Options

The following command displays all available command-line options and flags you can use with LogKeys.

logkeys --help

Useful options include:

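- --start: Start the logger

- --kill: Stop the logger

- --output <file>: Specify a custom log output file

- --no-func-keys: Don’t log function keys (F1-F12)

- --no-control-keys: Skip control characters (e.g., Ctrl+C, Backspace)

The available options can differ between builds, so check the output of logkeys --help on your system before relying on a particular flag.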
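The start, output, and stop options can be combined into a short, time-limited test session. The sketch below is one way to do that, not a LogKeys feature: `timed_session` and the `LOGKEYS_CMD` override are hypothetical names, and the log path in the example is illustrative.

```shell
#!/bin/sh
# timed_session is a hypothetical wrapper around --start, --output and --kill.
# LOGKEYS_CMD is an assumed override for dry runs (e.g. LOGKEYS_CMD=echo);
# when unset, the real binary is invoked through sudo.
timed_session() {
    log_file="$1"
    seconds="$2"
    cmd="${LOGKEYS_CMD:-sudo logkeys}"

    # $cmd is left unquoted on purpose so "sudo logkeys" splits into two words.
    $cmd --start --output "$log_file" || return 1
    sleep "$seconds"
    $cmd --kill
}

# Example: record for 60 seconds into a custom file (path is illustrative).
# timed_session /tmp/logkeys-test.log 60
```

Setting `LOGKEYS_CMD=echo` before calling the function prints the commands that would run instead of executing them, which is a cheap way to check the arguments first.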

View the Logged Keystrokes

The cat command displays the contents of the default log file where LogKeys saves keystrokes.

sudo cat /var/log/logkeys.log

You can also open it in a text editor such as nano, or in a pager such as less:

sudo nano /var/log/logkeys.log

sudo less /var/log/logkeys.log
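On a long-running test, the log grows quickly, so it can help to pull out a single day. The following sketch assumes each log line begins with a timestamp in `YYYY-MM-DD HH:MM:SS+ZZZZ` form; that format is an assumption to verify against your own log file, and `entries_for_date` is a hypothetical helper.

```shell
#!/bin/sh
# entries_for_date is a hypothetical helper.
# Assumption: every log line starts with a date in YYYY-MM-DD form.
# It reads log text on standard input and prints lines from the given date.
entries_for_date() {
    grep -- "^$1"
}

# Example (root is needed to read the default log file):
# sudo cat /var/log/logkeys.log | entries_for_date "$(date +%F)"
```

Reading from standard input keeps the helper usable with `sudo cat`, since a shell function cannot be called through sudo directly.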

Uninstall LogKeys in Linux

To remove LogKeys from your system and clean up the installed binaries, manuals, and scripts, run the following commands from the source directory you built it in:

cd build

sudo make uninstall

This will remove all files that were installed with make install, including the logkeys binary and man pages.

Conclusion

LogKeys is a powerful keylogger for Linux that enables users to monitor keystrokes in a variety of environments. Its compatibility with modern systems and ease of installation make it a valuable tool for security auditing, parental control testing, and educational research.

However, keylogging should only be used in ethical, lawful contexts, such as with explicit user consent or to monitor your own system. Misuse can lead to serious legal consequences. Use responsibly and stay informed.