Conventional wisdom has it that if you want to make use of "the cloud," you've got to use someone else's service -- Amazon's EC2, Google's clouds, and so on.

Canonical, through its new edition of Ubuntu Server, has set out to change all that. Instead of using someone else's cloud, it's now possible to set up your own cloud -- to create your own elastic computing environment, run your own applications on it, and even connect it to Amazon EC2 and migrate it outwards if need be.

A DIY Private Cloud

Ubuntu Enterprise Cloud, or UEC for short, lets you create your own cloud computing infrastructure with nothing more than whatever commodity hardware you've got that already runs Ubuntu Server.

It's an implementation of the Eucalyptus cloud-computing architecture, which is interface-compatible with Amazon's own cloud system, but could, in theory, support interfaces for any number of cloud providers.

Since Amazon's APIs and cloud systems are broadly used and familiar to most people who've done work with the cloud, it makes sense to start by offering what people already know.

A UEC setup consists of a front-end computer -- a "controller" -- and one or more "node" systems. The nodes use either KVM or Xen virtualization technology, your choice, to run one or more system images.

Xen was the original virtualization technology with KVM a recent addition, but that doesn't mean one is being deprecated in favor of the other.

If you're a developer for either environment, or you simply have more proficiency in KVM vs. Xen (or vice versa), your skills will come in handy either way.

Keep in mind you can't just use any old OS image, or any old Linux image for that matter. It has to be specially prepared for use in UEC.

As of this writing, Canonical has provided a few basic system images that ought to cover the most common usage or deployment scenarios.

Hardware Requirements

Note also that the hardware you use needs to meet certain standards. Each of the node computers needs to be able to perform hardware-accelerated virtualization via the Intel VT spec. (If you're not sure, ZDNet columnist Ed Bott has compiled a helpful shirt-pocket list of recent Intel CPUs that support VT.)
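If the machine already runs Linux, you can also ask the CPU directly. The sketch below is my own illustration, not part of Canonical's tooling: it assumes the usual Linux convention that Intel VT shows up as the `vmx` flag in /proc/cpuinfo, and the function name is made up for the example.

```python
def has_intel_vt(cpuinfo_text: str) -> bool:
    """Return True if any 'flags' line in the given cpuinfo text lists vmx."""
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        # Only inspect the CPU feature-flag lines, not model names etc.
        if key.strip() == "flags" and "vmx" in value.split():
            return True
    return False


if __name__ == "__main__":
    try:
        with open("/proc/cpuinfo") as f:
            print("Intel VT advertised:", has_intel_vt(f.read()))
    except OSError:
        print("/proc/cpuinfo is not available on this system")
```

Keep in mind that this only reports what the processor advertises; on some machines VT also has to be switched on in the BIOS before a hypervisor can use it.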

The controller does not need to be VT-enabled, but it helps. In both cases, a 64-bit system is strongly recommended. Both the nodes and the controller should be dedicated systems: they should not be used for anything else.

The computers in question also need to meet certain memory and performance standards. Canonical's recommendations are 512MB - 2GB of memory and 40GB - 200GB of storage for the controller, and 1 - 4GB of RAM and 40 - 100GB of storage for the nodes.
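If you're sizing up a pile of spare machines, a few lines of Python can flag the ones that fall short. This is only a sketch: it treats the low end of each range quoted above as a hard minimum (my reading, not Canonical's wording), and the `Machine` type and `shortfalls` function are hypothetical names for this example.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Machine:
    role: str      # "controller" or "node"
    ram_mb: int
    disk_gb: int


# Low end of each recommended range: (RAM in MB, disk in GB).
MINIMUMS = {"controller": (512, 40), "node": (1024, 40)}


def shortfalls(machine: Machine) -> List[str]:
    """List the ways a machine falls below the minimum for its role."""
    min_ram, min_disk = MINIMUMS[machine.role]
    problems = []
    if machine.ram_mb < min_ram:
        problems.append(f"RAM {machine.ram_mb}MB is below {min_ram}MB")
    if machine.disk_gb < min_disk:
        problems.append(f"disk {machine.disk_gb}GB is below {min_disk}GB")
    return problems


if __name__ == "__main__":
    for m in (Machine("controller", 2048, 80), Machine("node", 512, 20)):
        print(m.role, shortfalls(m) or "meets the minimums")
```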

It probably goes without saying that you'll want the fastest possible network connection between all elements: gigabit Ethernet whenever possible, since you'll be moving hundreds of megabytes of data among the nodes, the controller, and the outside.

Finally, you'll need at least one machine that can talk to the server over an HTTP connection to gain access to Eucalyptus's admin console.

This can be any machine that has a Web browser, but for the initial stage of the setup it's probably easiest to use an Ubuntu desktop client system.

The scripts and other client-end technology provided all run on Ubuntu anyway, so that may be the best way to at least get the cloud's basic functionality up and running.

Implementation

Setting up a UEC instance is a bit more involved than just setting up a cluster, although some of the same procedures apply.

It's also not a good idea to dive into this without some existing understanding of Linux (obviously), Ubuntu specifically (naturally), and cloud computing concepts in general.

The first thing to establish before touching any hardware is the network topology. All the machines in question -- controller, nodes, and client-access machines -- should be able to see each other on the same network segment.
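One way to sanity-check a planned topology before touching any hardware is to confirm that every address falls inside the same subnet. The sketch below assumes the network segment corresponds to a single IP subnet, which is a simplification; the host names and addresses are invented for the example.

```python
import ipaddress


def off_segment(segment, hosts):
    """Return the names of hosts whose address lies outside the subnet."""
    network = ipaddress.ip_network(segment)
    return sorted(
        name
        for name, address in hosts.items()
        if ipaddress.ip_address(address) not in network
    )


if __name__ == "__main__":
    planned = {
        "controller": "192.168.1.10",
        "node1": "192.168.1.11",
        "client": "192.168.2.5",   # deliberately on the wrong subnet
    }
    print(off_segment("192.168.1.0/24", planned))
```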

Canonical advises against allowing another DHCP server to assign these machines addresses, since the controller can handle that duty.

I've found you can get away with having another DHCP server, as long as the IP assignments are consistent (e.g., the same MAC is consistently given the same IP address).
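If you do keep a separate DHCP server, it's worth verifying that consistency rather than trusting it. Here is a minimal sketch that takes (MAC, IP) pairs, however you choose to extract them from your DHCP server's logs, and reports any MAC that has been handed more than one address. The function name and sample data are hypothetical.

```python
from collections import defaultdict


def inconsistent_macs(leases):
    """Map each MAC seen with more than one IP to its sorted list of IPs."""
    seen = defaultdict(set)
    for mac, ip in leases:
        seen[mac.lower()].add(ip)   # MACs compare case-insensitively
    return {mac: sorted(ips) for mac, ips in seen.items() if len(ips) > 1}


if __name__ == "__main__":
    history = [
        ("00:16:3E:00:00:01", "192.168.1.11"),
        ("00:16:3e:00:00:01", "192.168.1.11"),
        ("00:16:3e:00:00:02", "192.168.1.12"),
        ("00:16:3e:00:00:02", "192.168.1.40"),   # this one moved
    ]
    print(inconsistent_macs(history))
```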

The actual OS installation is extremely simple. Download the Ubuntu Server 9.10 installation media, burn it to disc or place it on a flash drive (the latter is markedly faster), boot it, and at the installation menu select "Install Ubuntu Enterprise Cloud." You'll be prompted during the setup for a "Cloud installation mode"; select "Cluster" for the cloud controller. (You can optionally enter a list of IP addresses to be allocated for node instances.)

After the cluster controller is up and running, set up the nodes in the same way. At the "Cloud installation mode" menu it should autodetect the presence of the cloud controller and select "Node."

If it doesn't do this, that's the first sign something went wrong -- odds are for whatever reason the machines can't see each other on the network, and you should do some troubleshooting in that regard before moving on.

Once all the nodes are in place, you'll need to run the euca_conf command on the controller. This ensures that the controller can see each node, and allows each one to be added to the list of available nodes.
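To make that step repeatable, you could generate the command line from a list of node addresses instead of typing it by hand. The sketch below only builds the command; it doesn't run anything. The `--register-nodes` option is what I recall from the Eucalyptus documentation of this era, not something spelled out here, so treat it as an assumption and check `euca_conf --help` on your own controller first. The IP addresses are placeholders.

```python
import shlex


def register_nodes_command(node_ips):
    """Build (but do not run) a euca_conf invocation for the given nodes."""
    if not node_ips:
        raise ValueError("at least one node address is required")
    # Assumption: euca_conf takes the node list as one space-separated argument.
    return ["sudo", "euca_conf", "--register-nodes", " ".join(node_ips)]


if __name__ == "__main__":
    command = register_nodes_command(["192.168.1.11", "192.168.1.12"])
    # shlex.join quotes the space-separated list so it can be pasted into a shell.
    print(shlex.join(command))
```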

The controller doesn't automatically discover and add nodes; this is a manual process for the sake of security. (If you have more than one cluster on a single network segment, you can quickly see why this is a smart idea.)

A UEC cluster doesn't do anything by itself. It's just a container, inside which you place any number of virtual machine images.

Virtual machine images for UEC have a specific format, too. You can't simply copy a disk image or .ISO and boot that into a UEC cluster, as you might be able to with a virtualization product like VMware or VirtualBox.

Instead, you have to "package" the kernel and a few other components in a certain way, and then feed that package to the cluster.

Canonical has several of these images already available. They're representative of the most common Linux distributions out there, so most everyone should be able to find something that matches what they need or already use.

The process for uploading a kernel bundle is a multi-step method that you should take as slowly and gradually as possible.

In fact, you may be best off taking the instructions described on the page, copying them out, and turning them into a shell script.

That way, you won't be at the mercy of typing the wrong filename or passing the wrong flags -- and not just once, but potentially over and over.

The same goes for the scripts used to build custom images (read on for more on that).
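As an illustration of what such a script might look like, here is a sketch in Python that generates the bundle, upload, and register commands for one file, so the filenames are typed exactly once. The command names and flags (`euca-bundle-image`, `euca-upload-bundle`, `euca-register`) reflect my understanding of the euca2ools tools rather than anything quoted from Canonical's instructions, and the paths and bucket name are invented; compare the output against the official steps before running any of it.

```python
import posixpath
import shlex


def bundle_upload_register(path, bucket, kind="image", workdir="/tmp"):
    """Return the three commands (as argument lists) for one bundle."""
    bundle = ["euca-bundle-image", "-i", path, "-d", workdir]
    if kind == "kernel":
        bundle += ["--kernel", "true"]
    elif kind == "ramdisk":
        bundle += ["--ramdisk", "true"]
    elif kind != "image":
        raise ValueError(f"unknown bundle kind: {kind}")
    # Assumption: the manifest is named after the bundled file.
    manifest = posixpath.basename(path) + ".manifest.xml"
    return [
        bundle,
        ["euca-upload-bundle", "-b", bucket, "-m", posixpath.join(workdir, manifest)],
        ["euca-register", f"{bucket}/{manifest}"],
    ]


if __name__ == "__main__":
    for command in bundle_upload_register("/images/vmlinuz", "mybucket", kind="kernel"):
        print(shlex.join(command))
```

This sketch leaves out one wrinkle: a root filesystem image normally has to be tied to the kernel and ramdisk IDs that the earlier register steps print out, so a real script would need to capture and pass those along.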

Finally, once an image has been uploaded and prepared, the administrator can start the instance through one of Eucalyptus's command-line scripts.

Note that an image might take some time to boot depending on the hardware configuration (and whatever else might also be running on the cluster), so you might see the image show up as "pending" when you use the euca-describe-instances command to list running instances.
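If you'd rather not keep re-running the command by eye, you can capture its output and check the states programmatically. The sketch below assumes the EC2-style text format, in which each instance gets a line starting with INSTANCE followed by the instance ID; rather than trust an exact column position, it looks for a known state word anywhere on the line. The sample output is made up for the example.

```python
KNOWN_STATES = {"pending", "running", "shutting-down", "terminated"}


def instance_states(output):
    """Map instance IDs to their state from euca-describe-instances text."""
    states = {}
    for line in output.splitlines():
        fields = line.split()
        if len(fields) < 2 or fields[0] != "INSTANCE":
            continue
        # Take the first field that is a recognised state word.
        state = next((f for f in fields[2:] if f in KNOWN_STATES), "unknown")
        states[fields[1]] = state
    return states


def all_running(output):
    """True only if at least one instance is listed and none is still booting."""
    states = instance_states(output)
    return bool(states) and all(s == "running" for s in states.values())


if __name__ == "__main__":
    sample = (
        "RESERVATION r-0001 admin default\n"
        "INSTANCE i-0001 emi-0001 192.168.1.50 172.19.1.2 pending mykey\n"
    )
    print(instance_states(sample), all_running(sample))
```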

Walrus And Amazon S3

Those who've used Amazon's EC2 will be familiar with Amazon S3. That's the storage service provider for EC2, which lets you preserve data in a persistent fashion for use in the cloud.

Eucalyptus has a similar technology, Walrus, which is interface-compatible with S3.

If you're familiar with S3 and have already written software that makes use of it, retooling said software for Walrus shouldn't be too difficult.

They use many of the same command-line functions -- e.g., S3's implementation of Curl -- but you can also use Amazon's own EC2 API/AMI toolset to talk to Walrus as if it were Amazon's own repositories.

Note that Walrus and S3 have a few functional differences. You can't, for instance, yet perform virtual hosting of buckets, a feature typically used for serving multiple sites from a single server.

This is something that's probably more useful on Amazon's services than in your own cloud, so it's not terribly surprising that Walrus doesn't support it (yet).
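In practice, the difference shows up in how an object's URL is formed. The sketch below contrasts the two addressing styles as I understand them: with virtual hosting the bucket name becomes part of the hostname, while without it the bucket sits in the path. The Walrus port (8773) and service path (/services/Walrus) are the Eucalyptus defaults as I recall them, not details given here, so confirm them against your own installation; the host, bucket, and key names are placeholders.

```python
def s3_virtual_hosted_url(bucket, key):
    """Virtual-hosted style: the bucket name is part of the hostname."""
    return f"http://{bucket}.s3.amazonaws.com/{key}"


def walrus_object_url(host, bucket, key, port=8773):
    """Path style: the bucket name is part of the path on one fixed host."""
    # Assumption: default Walrus port and service path.
    return f"http://{host}:{port}/services/Walrus/{bucket}/{key}"


if __name__ == "__main__":
    print(s3_virtual_hosted_url("mybucket", "backup.tar.gz"))
    print(walrus_object_url("192.168.1.10", "mybucket", "backup.tar.gz"))
```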

Making Your Own Image

I mentioned before that you can't just use any old OS with UEC; you have to supply it with a specially-prepared operating system image.

Canonical has a few, but if you want to create your own image, you can.

As you can guess, this isn't a trivial process. You need to provide a kernel, an optional ramdisk (for the system to boot to), and a virtual-machine image generated using the vmbuilder tool.
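For a feel of what the vmbuilder step involves, here is a sketch that assembles a basic invocation for either hypervisor. It only builds the command line. The `--suite` and `--arch` options are ones I believe vmbuilder accepts, and "karmic" is the release codename for Ubuntu 9.10, but a real UEC image will need further options that I'm not guessing at here; consult the vmbuilder documentation for those.

```python
import shlex


def vmbuilder_command(hypervisor, suite="karmic", arch="amd64"):
    """Build (but do not run) a minimal vmbuilder invocation."""
    if hypervisor not in ("kvm", "xen"):
        raise ValueError("hypervisor must be 'kvm' or 'xen'")
    return ["sudo", "vmbuilder", hypervisor, "ubuntu",
            "--suite", suite, "--arch", arch]


if __name__ == "__main__":
    print(shlex.join(vmbuilder_command("kvm")))
```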

It's also possible to use RightScale, a cloud management service that works with Amazon, RackSpace, and GoGrid as well as Eucalyptus-style clouds.

Obviously you'll need a RightScale account to take advantage of this feature, but the basic single-account version of RightScale is free, and has enough of the feature set to give you a feel for what it's all about.

The Future

So what's next for Eucalyptus on Ubuntu? One possibility that presents itself is using Eucalyptus as an interface between multiple cloud architectures.

The folks at Canonical have not planned anything like this, but the potential is there: Eucalyptus can, in theory, talk to any number of cloud architectures, and could serve as an intermediary between them -- a possible escape route for clouds that turn out to be a little too proprietary for their own good.

Another, more practical possibility is more in the realm of a feature request -- that the existing process for creating, packaging, uploading and booting system images could be automated that much more.

Perhaps the various tools could be pulled together and commanded from a central console, so the whole thing could be done in an interactive, stepwise manner.

What Eucalyptus and UEC promise most immediately, though, is a way to take existing commodity hardware and make it elastic without sacrificing outwards expansion.

What you create with UEC doesn't have to stay put, and that's a big portion of its appeal.

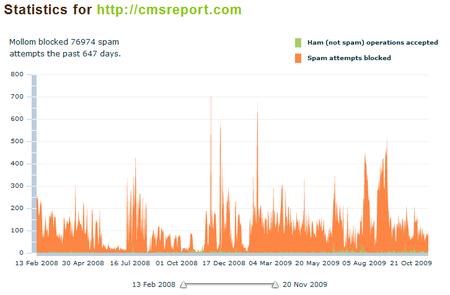
