http://freedompenguin.com/articles/how-to/schedule-fios-router-reboots-with-a-pogoplug

There are few things in life more irritating than having your Internet go out. This is often caused by your router needing a reboot. Sadly, not all routers are created equal, which complicates things a bit. At my home, for example, we have FIOS Internet. My connection from my ONT to my FIOS router is through coaxial cable (coax). Why does this matter? Because if I were connected via CAT6 from my ONT, I could use the router of my choosing. Sadly, a coaxial connection doesn’t easily afford me this opportunity.

So why don’t I just switch my FIOS over to CAT6 instead of using the coaxial cable? Because I have no interest in running the CAT6 throughout my home. This means I must get the most out of my ISP provided router as possible.

2) This router has a small NAT table that means frequent rebooting is needed.

Thankfully, I’m pretty good at coming up with reliable solutions. To tackle the first issue, I simply turned off the wifi portion of the Actiontec router. This allowed me to connect to my own personal WiFi instead. As for the second problem, this was a bit trickier. Having tested the “Internet Only Bridge” approach for the Actiontec and watching it fail often, I finally settled on using my own personal router as a switch instead. It turned out to be far more reliable and I wasn’t having to mess with it every time my ISP renewed a new IP address. Trust me when I say I’m well aware of ALL of the options and this is what works best for me. Okay, moving on.

Automatic rebooting

As reliable as my current setup is, there is still the issue of the small NAT table with the Actiontec. Being the sort of person who likes simple, I usually just reboot the router when things start slowing down. It’s rarely needed, however getting to the box is a pain in the butt.

This lead me on a mission: how can I automatically reboot my router without buying any extra hardware? I’m on a budget, so simply buying one of those IP-enabled remote power switches wasn’t something I was going to do. After all, if the thing stops working, I’m left with a useless brick.

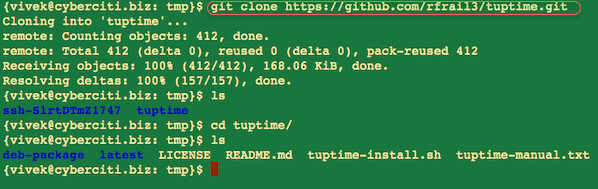

Instead, I decided to build my own. Looking around in my “crap box”, I discovered two Pogoplugs I had forgotten about. These devices provide photo backup and sharing for the less tech savvy among us. All I need to do was install Linux onto the Pogoplug device.

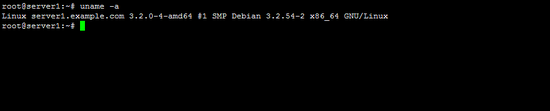

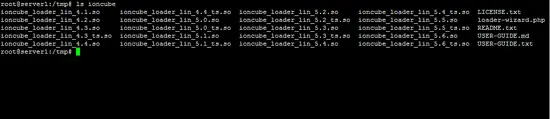

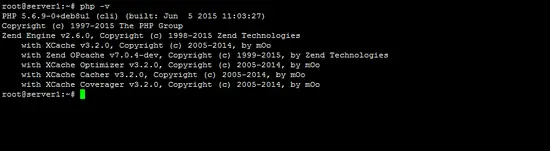

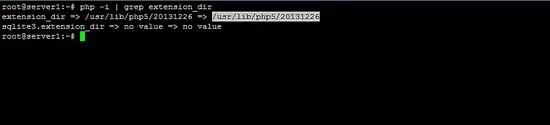

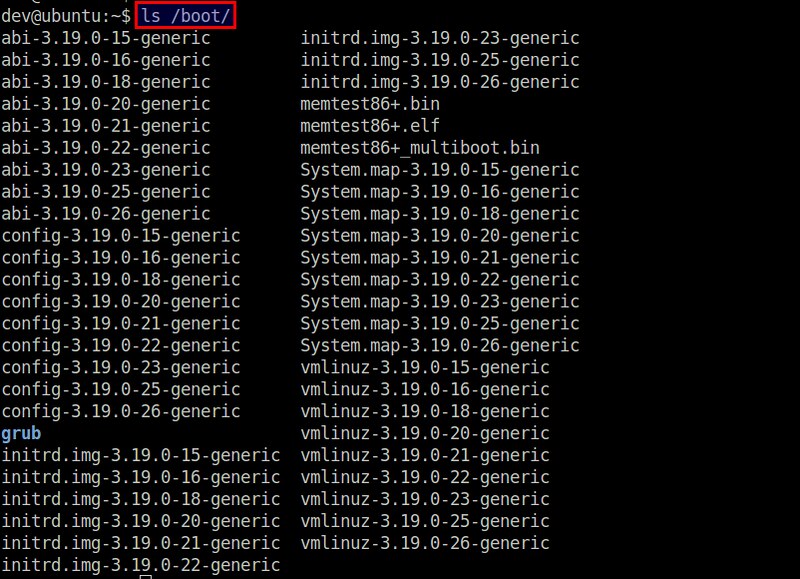

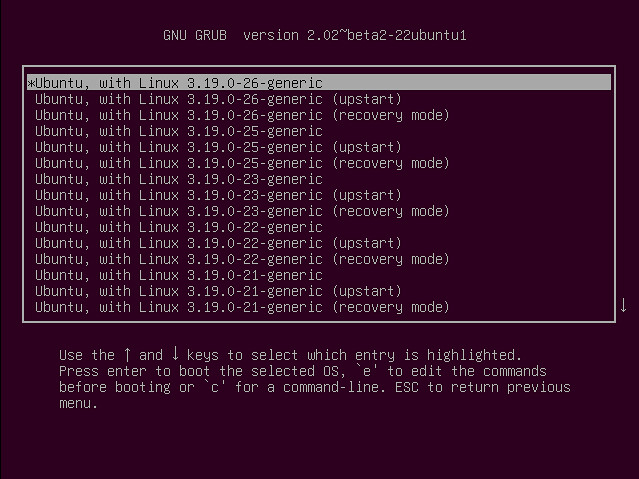

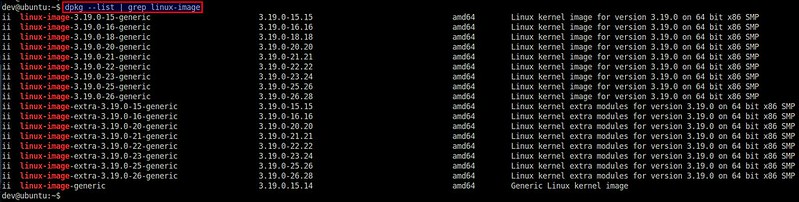

Installing Debian onto the Pogoplug

I should point out that most of the tutorials regarding installing Debian (or any Linux distro) onto a Pogoplug are missing information, half-wrong and almost certain to brick the device. After extensive research I found a tutorial that provides complete, accurate information. Based on that research, I recommend using the tutorial for the Pogoplug v4 (both Series 4 and Mobile). If you try out the linked tutorial on other Pogoplug models you will “brick” the Pogoplug.

Getting started: When running the curl command (for dropbear), if you are getting errors – leave the box plugged in and Ethernet connected for at least an hour. If you continue to see the error: “pogoplug curl: (7) Failed to connect to”, then you need to contact Pogoplug to have them de-register the device.

If installing Debian on the Pogoplug sounds scary or you’ve already got a Raspberry Pi running Linux that you’re not using, then you’re ready for the next step.

Setting up your router reboot box

(Hat tip to Verizon Forums)

Go ahead and SSH into your newly tweaked Pogoplug. Next, you’re going to want to install a package called “expect.” Assuming you’re not running as root, we’ll be using “sudo” for this demonstration. I first discovered this concept on the Verizon forums last year. Even though it was scripted for a Pi, I found it also works great on the Pogoplug. SSH into your Pogoplug:


The file we just created accesses the router via telnet (locally), then using hard returns (\r) is logging into the router and rebooting it. Clearly this file on it’s own would be annoying, since executing it just reboots your router. However it does provide the executable for our next file so that we can automate when we want it to run.

Let’s open nano in the same directory and paste in the following contents:

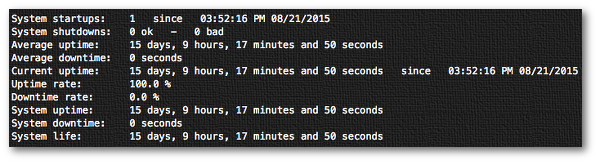

Now save this file as verizonrouterreboot.sh so it can provide you with a log file and run your expect script.

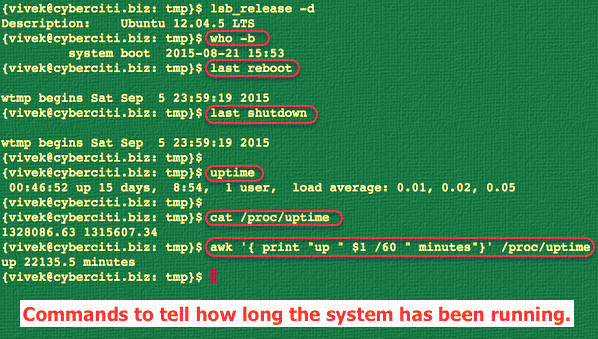

Save this file as pingme.sh and it will make sure

you’ll never have to go fishing for the power outlet ever again. This

script is designed to ping an OpenDNS server on a set schedule

(explained shortly). If the ping fails, it then runs the reboot script.

Before I wrap this up, there are two things that must still be done to make this work. First, we need to make sure these files can be executed.

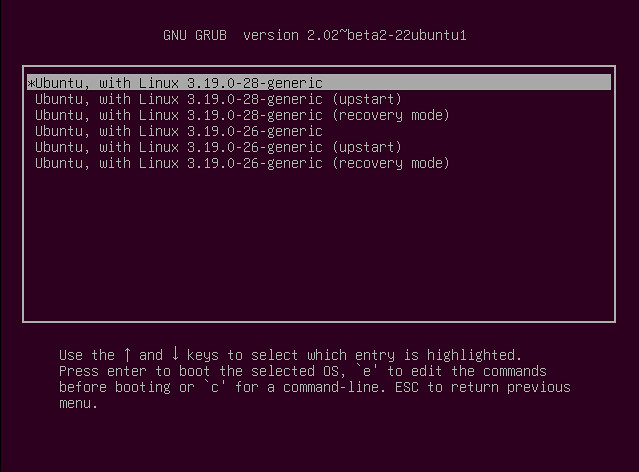



So why don’t I just switch my FIOS over to CAT6 instead of using the coaxial cable? Because I have no interest in running CAT6 throughout my home. This means I must get as much out of my ISP-provided router as possible.

What is so awful about using the Actiontec router?

1) The Actiontec router overheats when handling both WiFi and router duties.

2) This router has a small NAT table, which means frequent rebooting is needed.

Thankfully, I’m pretty good at coming up with reliable solutions. To tackle the first issue, I simply turned off the WiFi portion of the Actiontec router. This allowed me to connect to my own personal WiFi instead. The second problem was a bit trickier. Having tested the “Internet Only Bridge” approach for the Actiontec and watched it fail often, I finally settled on using my own personal router as a switch instead. It turned out to be far more reliable, and I wasn’t having to mess with it every time my ISP assigned a new IP address. Trust me when I say I’m well aware of ALL of the options and this is what works best for me. Okay, moving on.

Automatic rebooting

As reliable as my current setup is, there is still the issue of the small NAT table with the Actiontec. Being the sort of person who likes simple, I usually just reboot the router when things start slowing down. It’s rarely needed; however, getting to the box is a pain in the butt.

This led me on a mission: how can I automatically reboot my router without buying any extra hardware? I’m on a budget, so simply buying one of those IP-enabled remote power switches wasn’t something I was going to do. After all, if the thing stops working, I’m left with a useless brick.

Instead, I decided to build my own. Looking around in my “crap box”, I discovered two Pogoplugs I had forgotten about. These devices provide photo backup and sharing for the less tech-savvy among us. All I needed to do was install Linux onto the Pogoplug device.

Why would someone choose a Pogoplug vs a Raspberry Pi? Easy: the Pogoplugs are “stupid cheap.” According to the current listings on Amazon, a Pi Model B+ is $32 USD and a Pi 2 will run $41 USD. Compare that to $10 for a new Pogoplug and it’s obvious which option makes the most sense. I’d much rather free up my Pi for other duties than merely managing my router’s ability to reboot itself.

Installing Debian onto the Pogoplug

I should point out that most of the tutorials regarding installing Debian (or any Linux distro) onto a Pogoplug are missing information, half-wrong and almost certain to brick the device. After extensive research I found a tutorial that provides complete, accurate information. Based on that research, I recommend using the tutorial for the Pogoplug v4 (both Series 4 and Mobile). If you try out the linked tutorial on other Pogoplug models you will “brick” the Pogoplug.

Getting started: When running the curl command (for dropbear), if you are getting errors – leave the box plugged in and Ethernet connected for at least an hour. If you continue to see the error: “pogoplug curl: (7) Failed to connect to”, then you need to contact Pogoplug to have them de-register the device.

If installing Debian on the Pogoplug sounds scary or you’ve already got a Raspberry Pi running Linux that you’re not using, then you’re ready for the next step.

Setting up your router reboot box

(Hat tip to Verizon Forums)

Important: After you’ve installed Debian onto your Pogoplug v4 (or set up your existing Raspberry Pi instead), you would be wise to consider setting up a common non-root user for casual SSH sessions. Even though this is behind your router’s firewall, you’re still running a Linux box as root with various open ports.

First up, log in to your Actiontec MI424WR (or similar) FIOS router, browse to Advanced, click Yes to acknowledge the warning, then click on Local Administration on the bottom left. Check “Using Primary Telnet Port (23)” and hit Apply. This is for local administration only and is not to be confused with Remote Administration settings.

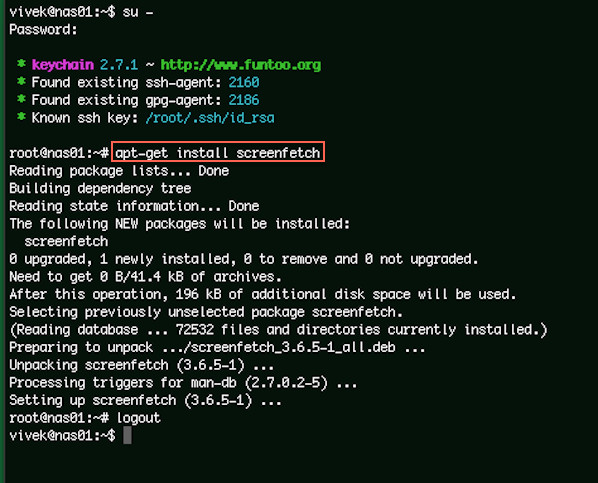
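Before going further, it may be worth confirming that the router now accepts telnet connections from your LAN. What follows is only a minimal sketch: it assumes bash (for its /dev/tcp feature) and the coreutils timeout command, and the function name telnet_open is made up for this example.

```bash
#!/bin/bash
# telnet_open HOST [PORT]: succeeds if a TCP connection can be opened.
# The function name is hypothetical; PORT defaults to 23 (telnet).
telnet_open() {
    local host="$1" port="${2:-23}"
    # /dev/tcp/HOST/PORT is a bash feature; timeout gives up after 3 seconds
    timeout 3 bash -c "exec 3<>/dev/tcp/$host/$port" 2>/dev/null
}
```

Running `telnet_open 192.168.1.1 && echo "telnet is reachable"` from the Pogoplug should print the message once the setting has been applied. Swap in your own router's LAN address if it differs.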

Go ahead and SSH into your newly tweaked Pogoplug. Next, you’re going to want to install a package called “expect.” Assuming you’re not running as root, we’ll be using “sudo” for this demonstration. I first discovered this concept on the Verizon forums last year. Even though it was scripted for a Pi, I found it also works great on the Pogoplug. Once you’re logged in, run the following:

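```bash
cd /home/non-root-username/
sudo apt-get install expect -y
```

Next, run nano in a terminal and paste in the following contents, editing any mention of /home/non-root-username/ and your router’s LAN IP address to match your own details.

```expect
# Open a telnet session to the router's LAN address
spawn telnet 192.168.1.1
# Log in with the router's admin credentials
expect "Username:"
send "admin\r"
expect "Password:"
send "ACTUAL-ROUTER-password\r"
# Wait for the router's command prompt, then reboot
expect "Wireless Broadband Router> "
sleep 5
send "system reboot\r"
sleep 5
send "exit\r"
close
sleep 5
exit
```

Now name the file verizonrouterreboot.expect and save it. You’ll note that we’re saving this in your /home/non-root-username/ directory. You could call the file anything you like, but for the sake of consistency, I’m sticking with the file names as I have them.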

The file we just created accesses the router via telnet (locally), then uses carriage returns (\r) to log into the router and reboot it. Clearly this file on its own would be annoying, since executing it just reboots your router. However, it does provide the executable for our next file so that we can automate when we want it to run.

Let’s open nano in the same directory and paste in the following contents:

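```bash
#!/bin/bash
# Run the expect script and capture its output (stdout and stderr) in a log
{
cd /home/non-root-username/
expect -f verizonrouterreboot.expect
echo "\r"
} > /home/non-root-username/verizonrouterreboot.log 2>&1
# Record when the reboot ran
echo "Nightly Reboot Successful: $(date)" >> /home/non-root-username/successful.log
sleep 3
exit
```

Now save this file as verizonrouterreboot.sh so it can provide you with a log file and run your expect script.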
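One thing to keep in mind about the script above: it writes the “Successful” line whether or not the expect run actually worked. If you’d rather log failures separately, here is a rough sketch. The function name run_and_log, the LOG_DIR variable and the failed.log file are all assumptions of mine, not part of the original scripts.

```bash
#!/bin/bash
# LOG_DIR is an assumption; the article keeps everything in this directory.
LOG_DIR="${LOG_DIR:-/home/non-root-username}"

# run_and_log COMMAND [ARGS...] (hypothetical helper)
# Runs the command, sends stdout and stderr to one log file, and records
# success or failure based on the command's exit status.
run_and_log() {
    if "$@" > "$LOG_DIR/verizonrouterreboot.log" 2>&1; then
        echo "Nightly Reboot Successful: $(date)" >> "$LOG_DIR/successful.log"
    else
        echo "Nightly Reboot FAILED: $(date)" >> "$LOG_DIR/failed.log"
        return 1
    fi
}
```

It would be called as `run_and_log expect -f verizonrouterreboot.expect`. This only catches the cases where expect itself exits with an error, so treat the success line as a hint and not as proof that the router really rebooted.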

As an added bonus, I’m going to also provide you with a script that will reboot the router if the Internet goes out or the router isn’t connecting with your ISP.

Once again, open up nano in the same directory and drop the following into it:

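```bash
#!/bin/bash
# Ping an OpenDNS resolver once; reboot the router only if the ping fails
if ping -c 1 208.67.220.220
then
    : # the colon is a no-op, needed because the then-branch cannot be empty
else
    /home/non-root-username/verizonrouterreboot.sh
fi
```

Save this file as pingme.sh and it will make sure you’ll never have to go fishing for the power outlet ever again. This script is designed to ping an OpenDNS server on a set schedule (explained shortly). If the ping fails, it then runs the reboot script.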
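Since the script above reboots the router after a single failed ping, one dropped packet or a brief hiccup at 208.67.220.220 would be enough to trigger it. A slightly more forgiving check, as a sketch only, tries several hosts and reports failure only if none of them answer. The function name internet_is_up and the PING_CMD variable are my own inventions, and the five-second -W timeout is an assumption based on the Linux ping found on Debian.

```bash
#!/bin/bash
# PING_CMD is a hypothetical override hook, handy for dry runs.
# -c 1 sends one packet; -W 5 waits up to five seconds for a reply.
PING_CMD="${PING_CMD:-ping -c 1 -W 5}"

# internet_is_up HOST [HOST...]: succeeds if ANY host answers a ping.
internet_is_up() {
    local host
    for host in "$@"; do
        if $PING_CMD "$host" > /dev/null 2>&1; then
            return 0
        fi
    done
    return 1
}
```

In pingme.sh, the `if` line would then become `if internet_is_up 208.67.220.220 208.67.222.222` (the second address is the other OpenDNS resolver), with the rest of the script left as it is.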

Before I wrap this up, there are two things that must still be done to make this work. First, we need to make sure these files can be executed.

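```bash
chmod +x verizonrouterreboot.sh
chmod +x verizonrouterreboot.expect
chmod +x pingme.sh
```

Now that our scripts are executable, the next step is to put them on their appropriate schedules. My recommendation is to schedule verizonrouterreboot.sh at a time when no one is using the computer, say at 4am. And I recommend running “pingme” every 30 minutes. After all, who wants to be without the Internet for more than 30 minutes? You can set up a cron job and then verify your schedule is set up correctly.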
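As for the scheduling, here is a rough sketch of the crontab lines for a nightly reboot at 4am and a connectivity check every 30 minutes. The times and the SCRIPT_DIR path are assumptions to adjust for your own setup, and the helper function cron_entries is only there to print the lines.

```bash
#!/bin/bash
# SCRIPT_DIR is an assumption; point it at wherever you saved the scripts.
SCRIPT_DIR="/home/non-root-username"

# Print the crontab lines (hypothetical helper).
# Field order: minute hour day-of-month month day-of-week command
cron_entries() {
    echo "0 4 * * * $SCRIPT_DIR/verizonrouterreboot.sh"
    echo "*/30 * * * * $SCRIPT_DIR/pingme.sh"
}

cron_entries
```

Paste the two printed lines into the editor that `crontab -e` opens, then run `crontab -l` to confirm they were saved.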

Are you a cable Internet user?

You are? That’s awesome! As luck would have it, I’m working on two different approaches for automatically rebooting cable modems. If you use a cable modem and would be interested in helping me test these techniques out, HIT THE COMMENTS and let’s put our heads together. Let me know if you’re willing to help me do some testing!

I need to be able to test both the “telnet method” and the “wget to url” method with your help. Ideally if both work, this will cover most cable modem types and reboot methods.