http://opensource.com/life/12/10/NASA-achieves-data-goals-Mars-rover-open-source-software

Since the landing of NASA’s rover, Curiosity, on Mars on August 11th (Earth time), I have been following the incredible wealth of images that have been flowing back. I am awestruck by the breadth and beauty of the them.

The technological challenge of Curiosity sending back enormous amounts of data has, in my opinion, not been fully appreciated. From NASA reports, we know that Curiosity was sending back 'low level resolution' data (1,200 x 1,200 pixels) until it went through a software "brain transplant" and is now providing even more detailed and modifiable data.

How is this getting done so efficiently and distributed so effectively?

One recent story highlighted the 'anytime, anywhere' availability of Curiosity’s exploration that is handling "hundreds of gigabits/second of traffic for hundreds of thousands of concurrent viewers." Indeed, as the blog post from the cloud provider, Amazon Web Services (AWS), points out: "The final architecture, co-developed and reviewed across NASA/JPL and Amazon Web Services, provided NASA with assurance that the deployment model could cost-effectively scale, perform, and deliver an incredible experience of landing on another planet. With unrelenting goals to get the data out to the public, NASA/JPL prepared to service hundreds of gigabits/second of traffic for hundreds of thousands of concurrent viewers."

This is certainly evidence of the growing role that the cloud plays in real-time, reliable availability.

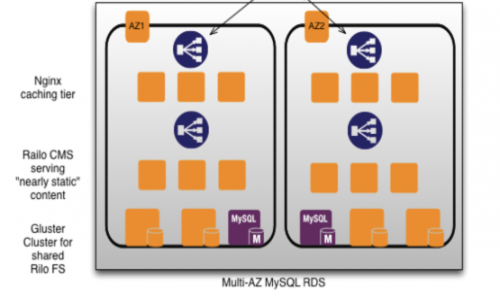

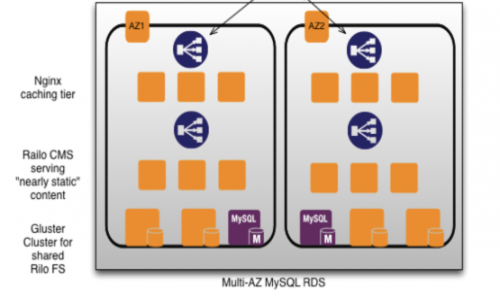

But, dig beneath the hood of this story—and the diagram included—and you’ll see another story. One that points to the key role of open source software in making this phenomenal mission work and the results available to so many, so quickly.

Here’s the diagram I am referring to:

If you look at the technology stack, you’ll see that at each level open source is key to achieving NASA’s mission goals. Let’s look at each one:

Among the known high-visiblity sites powered by Nginx, according to its website, are Netflix, Hulu, Pinterest, CloudFlare, Airbnb, WordPress.com, GitHub, SoundCloud, Zynga, Eventbrite, Zappos, Media Temple, Heroku, RightScale, Engine Yard and NetDNA.

I suspect that, like myself, the cascade of visual images from this unique exploration has sparked for yet another generation the mystery and immense challenge of life beyond our own planet. And what a change from the grainy television transmissions of the first moon landing, 43 years ago this summer (at least for those who are in a position to remember it!). Even 20 years ago, the delays, the inferior quality, and the narrow bandwidth of the data that could be analyzed stands in stark contrast to what is being delivered right now and for the next few years from this one mission.

Taken together, the combination of cloud and open source enabled the Curiosity mission to provide these results in real time, not months delayed; at high quality, not "good enough" quality. A traditional, proprietary approach would not have been this successful, given the short time to deployment and shifting requirements that necessitated the ultimate in agility and flexibility. NASA/JPL are to be commended. And while there was one cloud offering involved, "it really could have been rolled with any number of other solutions," as the story cited at the beginning of this post notes.

As policy makers and technology strategists continue their focus on 'big data', the mission of Curiosity will provide some important lessons. One key take away: open source has been key to the success of this mission and making its results as widely available as possible in so quickly a time frame.

Since the landing of NASA’s rover, Curiosity, on Mars on August 11th (Earth time), I have been following the incredible wealth of images that have been flowing back. I am awestruck by the breadth and beauty of the them.

The technological challenge of Curiosity sending back enormous amounts of data has, in my opinion, not been fully appreciated. From NASA reports, we know that Curiosity was sending back 'low level resolution' data (1,200 x 1,200 pixels) until it went through a software "brain transplant" and is now providing even more detailed and modifiable data.

How is this getting done so efficiently and distributed so effectively?

One recent story highlighted the 'anytime, anywhere' availability of Curiosity’s exploration that is handling "hundreds of gigabits/second of traffic for hundreds of thousands of concurrent viewers." Indeed, as the blog post from the cloud provider, Amazon Web Services (AWS), points out: "The final architecture, co-developed and reviewed across NASA/JPL and Amazon Web Services, provided NASA with assurance that the deployment model could cost-effectively scale, perform, and deliver an incredible experience of landing on another planet. With unrelenting goals to get the data out to the public, NASA/JPL prepared to service hundreds of gigabits/second of traffic for hundreds of thousands of concurrent viewers."

This is certainly evidence of the growing role that the cloud plays in real-time, reliable availability.

But, dig beneath the hood of this story—and the diagram included—and you’ll see another story. One that points to the key role of open source software in making this phenomenal mission work and the results available to so many, so quickly.

Here’s the diagram I am referring to:

If you look at the technology stack, you’ll see that at each level open source is key to achieving NASA’s mission goals. Let’s look at each one:

Nginx

Nginx (pronounced engine-x) is a free, open source, high-performance HTTP server and reverse proxy, as well as an IMAP/POP3 proxy server. As a project, it has been around for about ten years. According to their website, Nginx now hosts nearly 12.18% (22.2M) of active sites across all domains. Nginx is generally regarded as a preeminent webserver for delivering content fast due to "its high performance, stability, rich feature set, simple configuration, and low resource consumption." Unlike traditional servers, Nginx doesn't rely on threads to handle requests. Instead it uses a much more scalable, event-driven (asynchronous) architecture. This architecture uses small, but more importantly, predictable amounts of memory under load.Among the known high-visiblity sites powered by Nginx, according to its website, are Netflix, Hulu, Pinterest, CloudFlare, Airbnb, WordPress.com, GitHub, SoundCloud, Zynga, Eventbrite, Zappos, Media Temple, Heroku, RightScale, Engine Yard and NetDNA.

Railo CMS

Railo is an open source content management system (CMS) software which implements the general-purpose CFML server-side scripting language. If you're familiar with Drupal, think mega-Drupal and that is Railo. It has recently been accepted as part of Jboss.org and runs on the Java Virtual Machine (JVM). It is often used to create dynamic websites, web applications and intranet systems. CFML is a dynamic language supporting multiple programming paradigms.GlusterFS

Perhaps the most important piece of this high-demand configuration, GlusterFS is an open source, distributed file system capable of scaling to several petabytes (actually, 72 brontobites!) and handling thousands of clients. GlusterFS clusters together storage building blocks over Infiniband RDMA or TCP/IP interconnect, aggregating disk and memory resources and managing data in a single global namespace. GlusterFS is based on a stackable user space design and can deliver exceptional performance for diverse workloads. It is especially adept at replicating big data across multiple platforms, allowing users to analyze the data via their own analytical tools. This technology is used to power the personalized radio service Pandora and the cloud content services company Brightcove, and by NTTPC for its cloud storage business. (In 2011, Red Hat acquired the open source software company, Gluster, which supports the GlusterFS upstream community; the product is now known as Red Hat Storage Server.)I suspect that, like myself, the cascade of visual images from this unique exploration has sparked for yet another generation the mystery and immense challenge of life beyond our own planet. And what a change from the grainy television transmissions of the first moon landing, 43 years ago this summer (at least for those who are in a position to remember it!). Even 20 years ago, the delays, the inferior quality, and the narrow bandwidth of the data that could be analyzed stands in stark contrast to what is being delivered right now and for the next few years from this one mission.

Taken together, the combination of cloud and open source enabled the Curiosity mission to provide these results in real time, not months delayed; at high quality, not "good enough" quality. A traditional, proprietary approach would not have been this successful, given the short time to deployment and shifting requirements that necessitated the ultimate in agility and flexibility. NASA/JPL are to be commended. And while there was one cloud offering involved, "it really could have been rolled with any number of other solutions," as the story cited at the beginning of this post notes.

As policy makers and technology strategists continue their focus on 'big data', the mission of Curiosity will provide some important lessons. One key take away: open source has been key to the success of this mission and making its results as widely available as possible in so quickly a time frame.

No comments:

Post a Comment